If you use social media, you’ve probably seen a friend share misleading or downright false information. When it comes to certain topics, like COVID-19, that misinformation can be dangerous. To combat myths, Facebook says it’s going to start telling you if you’ve interacted with a COVID-19 hoax or misinformation. The tech company says you may begin to see warnings in your News Feed in the next few weeks.

According to a post by Guy Rosen, Facebook’s VP of Integrity, the company works with over 60 fact-checking organizations in over 50 languages worldwide. That includes eight new partners since March.

Per the blog:

“Once a piece of content is rated false by fact-checkers, we reduce its distribution and show warning labels with more context. Based on one fact-check, we’re able to kick off similarity detection methods that identify duplicates of debunked stories. For example, during the month of March, we displayed warnings on about 40 million posts related to COVID-19 on Facebook, based on around 4,000 articles by our independent fact-checking partners. When people saw those warning labels, 95% of the time they did not go on to view the original content.”

Rosen cited examples including posts that claimed drinking bleach would cure COVID-19 and that physical distancing doesn’t work to mitigate its spread.

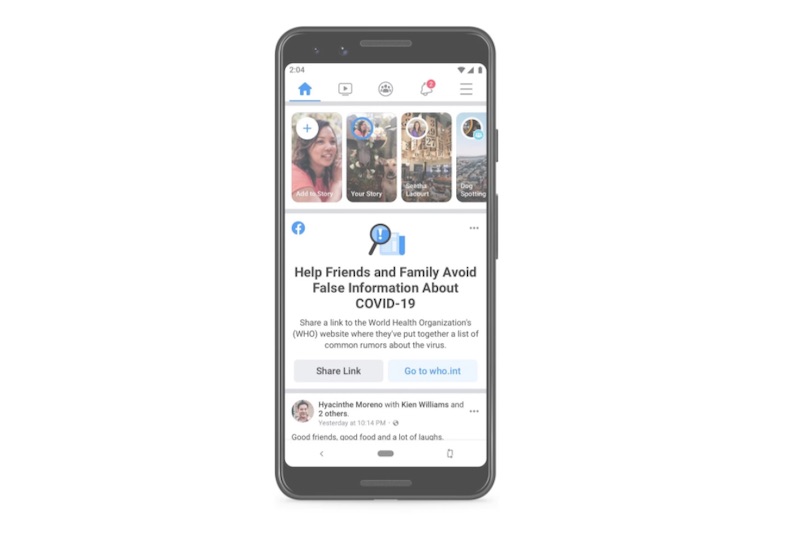

Within the next few weeks, you may see a warning message in News Feed if you’ve interacted with a piece of content deemed to be misinformation, or a prompt asking you to share accurate information from the World Health Organization (WHO) with your friends. You may have already seen a banner that directs you to a COVID-19 resources page.

Facebook’s actions follow a study from global activism nonprofit Avaaz, which reviewed a sample of misinformation and found that it had been shared over 1.7 million times and viewed about 117 million times. The study also found that it could take Facebook up to 22 days to downgrade or place a warning label on such content. Facebook also had an earlier issue with deleting COVID-19 posts that weren’t misinformation, including articles from reputable news sources and posts organizing donations, which the platform attributed to a bug.

So, keeping in mind that these huge platforms have the capacity to spread information, but not always the dexterity to make sure it’s all good information, you should still be careful when reading or sharing articles, posts, or claims. An article from The Guardian suggests that most people share fake news not because they want to or because they can’t discern that it’s fake, but because they’re too distracted when they hit share.

Meanwhile, TechCrunch reports that other platforms, including Twitter and Reddit, are also attempting to battle coronavirus misinformation. And the City of Los Angeles has a page of locally relevant myths it’s dispelled, including info on citations and the Safer at Home order.
